In the last 12 to 18 months, interest in agentic workflows has surged. What began as chat interactions has grown into systems that navigate software, take actions across integrated tools, query data, and write code. The promise lies in agents that interpret goals and context well enough to automate reasoning across tasks, from simple, repetitive work to complex, demanding challenges. The infrastructure, however, has not kept pace: models advance rapidly, but tooling remains fragmented. Teams hardcode prompts and connectors, and while frameworks handle orchestration, there is still no standard way to represent tools to models. As a result, agents are left to guess what tools exist and how to use them, which suggests that composability is as much a cognitive challenge for models as a technical one.

We have seen a similar pattern before. Early software depended on tightly coupled protocols until APIs introduced reusable interfaces: SOAP (Simple Object Access Protocol) and XML (Extensible Markup Language) arrived in the late 1990s, and REST (Representational State Transfer) simplified communication in the 2000s. In the mid-2010s, the Language Server Protocol (LSP) standardized how editors and language servers exchange information, making advanced features like autocomplete available across many editors. Each shift made systems easier to connect and extend.

Agents now need a similar leap. Static APIs describe mechanics but not meaning, leaving models without enough context to reason about them. What is emerging instead is a model-readable abstraction layer: schemas that make tools discoverable, interpretable, and invocable at runtime. Just as LSP did for editors and language servers, the Model Context Protocol (MCP) aims to offer such a layer for agents, giving tools a standard way to describe themselves to models and clients.

Is Today’s Infrastructure Enough?

A common question is whether existing APIs are sufficient. Models do not operate like traditional programs and cannot rely on endpoints alone, which creates a fundamental mismatch between how APIs are designed and how models reason over tools. APIs move data; models need meaning. A model needs to understand not just where an endpoint is, but why it exists, how it connects with others, and what to do when things go wrong. OpenAPI specs capture surface details but rarely convey the intent, usage patterns, or failure modes that models need to reason reliably. The workarounds mentioned earlier, such as hardcoded prompts and connectors, often break down at scale, leading to unreliable behavior.

For example, when reviewing a loan application, a model needs to understand which tools handle document parsing, credit scoring, and fraud detection, how outputs from one feed into another, what parameters are required, and how to handle missing or ambiguous data. Without structured context, the system is forced to guess, which compounds errors.
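A minimal Python sketch of what such structured context might look like for the loan example follows. The tool names, their declared inputs and outputs, and the `plan` helper are hypothetical illustrations, not part of MCP or of any product. The point is that once each tool declares what it requires and what it produces, a client can derive the order in which outputs feed into later tools and report missing data instead of guessing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical tool description: what a tool needs and what it produces."""
    name: str
    description: str
    requires: frozenset[str]
    produces: frozenset[str]


# Hypothetical tools for the loan-review example.
TOOLS = [
    ToolSpec("parse_documents", "Extract applicant fields from uploaded documents",
             frozenset({"documents"}), frozenset({"income", "identity"})),
    ToolSpec("score_credit", "Compute a credit score for a verified applicant",
             frozenset({"identity", "income"}), frozenset({"credit_score"})),
    ToolSpec("detect_fraud", "Flag inconsistencies between identity and score",
             frozenset({"identity", "credit_score"}), frozenset({"fraud_flag"})),
]


def plan(tools: list[ToolSpec], available: set[str]) -> tuple[list[str], dict[str, set[str]]]:
    """Order tools so each runs only once its inputs exist.

    Returns the runnable order and, for tools that can never run,
    the inputs that are missing (reported rather than guessed).
    """
    known = set(available)
    order: list[str] = []
    pending = list(tools)
    progress = True
    while pending and progress:
        progress = False
        for tool in list(pending):
            if tool.requires <= known:
                order.append(tool.name)
                known |= tool.produces  # outputs feed later tools
                pending.remove(tool)
                progress = True
    missing = {tool.name: set(tool.requires - known) for tool in pending}
    return order, missing
```

With only `{"documents"}` available, `plan(TOOLS, {"documents"})` orders all three tools; with nothing available, it returns no runnable tools and lists each tool's missing inputs.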

Beyond APIs, agents require other pieces that give models structure and reliability. MCP manifests provide metadata for reasoning, orchestration layers coordinate multi-step workflows, context management tracks state across steps, observability links logs to execution, and identity systems enforce scoped access. Together these shift the model from “calling functions in the dark” to reasoning with tools as part of a system.

Is MCP the Missing Layer?

The Model Context Protocol (MCP) was introduced by Anthropic in November 2024 in response to a growing communication gap between language models, agent frameworks, and external tools. MCP isn’t a new agent framework, orchestration layer, product, or model. It’s a standard that lets tools describe themselves in a consistent way to both clients and language models, and defines a structured process for clients to pass tools, data, and prompts to a model.

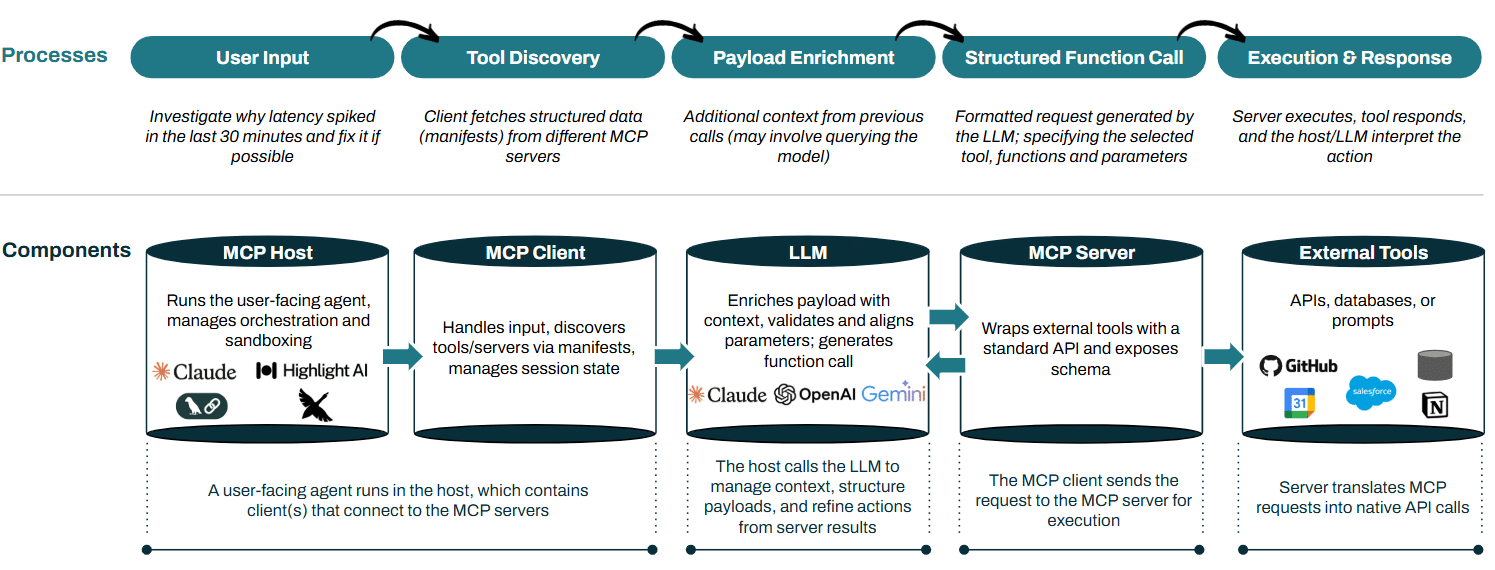

At its core, MCP defines three things:

- Manifests: JSON descriptions of a tool’s functions, parameters, and capabilities.

- Payloads: Structured data retrieved from tools and data sources at runtime.

- Model-Facing Input: A packaged format combining both manifests and payloads, providing structured input the model can reason over.
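In the MCP specification, the manifest idea takes the form of tool definitions: each has a `name`, a natural-language `description`, and an `inputSchema` written in JSON Schema. Messages use JSON-RPC 2.0; a client lists tools with the `tools/list` method and invokes one with `tools/call`, passing `name` and `arguments`. The sketch below builds these messages as plain Python dictionaries. The `score_credit` tool is hypothetical, and the helper functions are illustrative rather than part of an official MCP SDK.

```python
import json

# A tool definition in the shape MCP servers return from `tools/list`.
# The tool itself ("score_credit") is a hypothetical example.
SCORE_CREDIT_TOOL = {
    "name": "score_credit",
    "description": "Return a credit score for a verified loan applicant. "
                   "Call only after identity has been confirmed.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "applicant_id": {"type": "string", "description": "Internal applicant ID"},
            "bureau": {"type": "string", "enum": ["experian", "equifax", "transunion"]},
        },
        "required": ["applicant_id"],
    },
}


def list_tools_response(request_id: int, tools: list[dict]) -> dict:
    """JSON-RPC 2.0 response a server might send for `tools/list`."""
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": tools}}


def call_tool_request(request_id: int, name: str, arguments: dict) -> dict:
    """JSON-RPC 2.0 request a client sends to invoke a tool via `tools/call`."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    print(json.dumps(list_tools_response(1, [SCORE_CREDIT_TOOL]), indent=2))
    print(json.dumps(call_tool_request(2, "score_credit", {"applicant_id": "A-123"}), indent=2))
```

The `description` field is where intent and usage guidance live, which is exactly the context that endpoint-only API specs tend to leave out.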

Separating these pieces allows the model to focus on understanding intent and choosing actions, while the client handles discovery, formatting, and execution. The following diagram illustrates how these components interact in a typical MCP architecture.

MCP Architecture Overview

This approach gives each part of an agentic system a clear role: models interpret intent and produce structured calls, clients hold tool information and handle execution, servers manage access, and tools expose their capabilities in a standardized format. Communication is dynamic and iterative, with the model and the host/client exchanging messages back and forth. MCP is designed to be model-agnostic and is not tied to Claude or any specific stack.
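The division of labor above can be sketched from the client's side: the model proposes a structured call, and the client checks it against the tool's `inputSchema` before executing anything. The `execute` and `check_arguments` helpers, the stub handler, and the deliberately partial schema check (only `required` and top-level `type`, not full JSON Schema validation) are assumptions made for illustration. Results are wrapped in the shape of an MCP `tools/call` result, with failures reported as `isError: true` so the model can see them and adjust.

```python
from typing import Any, Callable


def _matches(value: Any, json_type: str) -> bool:
    """Check one value against a JSON Schema primitive type (subset only)."""
    if json_type == "integer":
        return isinstance(value, int) and not isinstance(value, bool)
    if json_type == "number":
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    expected = {"string": str, "boolean": bool, "object": dict, "array": list}.get(json_type)
    return expected is None or isinstance(value, expected)


def check_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return problems with a proposed call; an empty list means it looks valid.

    Deliberately partial: checks `required` and top-level `type` only.
    """
    problems = [f"missing required argument '{key}'"
                for key in schema.get("required", []) if key not in arguments]
    properties = schema.get("properties", {})
    for key, value in arguments.items():
        if key not in properties:
            problems.append(f"unknown argument '{key}'")
        elif not _matches(value, properties[key].get("type", "")):
            problems.append(f"argument '{key}' should be {properties[key]['type']}")
    return problems


def _text_result(text: str, is_error: bool) -> dict:
    # Same shape as an MCP `tools/call` result: content blocks plus `isError`.
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def execute(call: dict, schemas: dict[str, dict], handlers: dict[str, Callable[..., str]]) -> dict:
    """Validate a model-proposed call, run it, and report the outcome to the model."""
    name = call.get("name")
    arguments = call.get("arguments", {})
    if name not in handlers:
        return _text_result(f"unknown tool '{name}'", True)
    problems = check_arguments(schemas.get(name, {}), arguments)
    if problems:
        # Send the problem back to the model rather than guessing missing values.
        return _text_result("; ".join(problems), True)
    try:
        return _text_result(handlers[name](**arguments), False)
    except Exception as exc:  # tool failures become data the model can react to
        return _text_result(f"tool failed: {exc}", True)


# Hypothetical schema and stub handler for the loan example.
SCHEMAS = {
    "score_credit": {
        "type": "object",
        "properties": {"applicant_id": {"type": "string"}},
        "required": ["applicant_id"],
    }
}
HANDLERS = {"score_credit": lambda applicant_id: f"scored {applicant_id}"}
```

Keeping validation in the client means the model never touches credentials or transport; it only sees structured results, including errors it can recover from.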

Rather than replacing frameworks like AutoGen or LangChain, MCP complements them by standardizing how tools, data, and prompts are described and passed to models, leaving frameworks to focus on planning and orchestration. MCP is still early, however, and critical pieces like identity, access management, and developer tooling are still taking shape.

Mapping the MCP Architecture and Components:

- MCP Host: Hosts are top-level applications where users interact with agents, such as chat apps, IDEs, or dashboards. They embed MCP clients, handle input/output, and coordinate the full interaction between users, models, clients, and tools.

- MCP Clients: Clients are the runtime layer where agents execute and interact with users. They live inside hosts (UI), handle prompt construction, memory, tool planning, and state tracking, and turn model outputs into executable actions.

- MCP Servers: MCP servers wrap external tools in standardized, machine-readable manifests that define each function’s purpose, inputs, and outputs, letting language models reason about their use. They also expose resources like databases or prompt sets to streamline execution.

- Examples: Any tool that exposes an API can have an MCP server; a Gmail server enables email exchanges, and a HubSpot server enables changes to the CRM.

- Models: Models drive reasoning in MCP: they choose which tools to call and in what order, and interpret inputs. MCP setups aim to be largely model-agnostic; what matters is that a model follows tool schemas and maintains context.

- Examples: GPT-5, Claude 4, Gemini 2.5

- Server Generation and Curation: Most APIs are not MCP-ready out of the box. Server generation transforms existing endpoints into standardized MCP servers, creating manifests, payloads, and prompt metadata that models can reason over.

- MCP Marketplaces: Marketplaces serve as registries of MCP-compatible servers, functioning much like app stores for agents. They let agents find new capabilities based on the task at hand rather than relying on fixed APIs.
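Server generation, as described in the list above, is largely a translation step. The sketch below assumes an OpenAPI 3 operation object (with `operationId`, `summary` or `description`, `parameters`, and an optional JSON `requestBody`) and maps it to an MCP-style tool definition. It is a simplification: it ignores `$ref` resolution, authentication, and response schemas, and the `getApplication` operation is hypothetical. A generated description is also only as good as the spec it comes from, which is why curation matters.

```python
def operation_to_tool(operation: dict) -> dict:
    """Map one OpenAPI 3 operation object to an MCP-style tool definition (sketch)."""
    properties: dict[str, dict] = {}
    required: list[str] = []
    for param in operation.get("parameters", []):
        prop = dict(param.get("schema", {}))  # copy so the source spec is not mutated
        if "description" in param:
            prop["description"] = param["description"]
        properties[param["name"]] = prop
        if param.get("required", False):
            required.append(param["name"])
    body = operation.get("requestBody", {})
    body_schema = body.get("content", {}).get("application/json", {}).get("schema")
    if body_schema is not None:
        # Keep the JSON body as one nested argument instead of flattening it.
        properties["body"] = body_schema
        if body.get("required", False):
            required.append("body")
    return {
        "name": operation["operationId"],
        "description": operation.get("description") or operation.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


# Hypothetical operation from an internal loan API.
GET_APPLICATION = {
    "operationId": "getApplication",
    "summary": "Fetch a loan application by ID",
    "parameters": [
        {"name": "applicationId", "in": "path", "required": True,
         "schema": {"type": "string"}, "description": "Application identifier"},
        {"name": "includeDocuments", "in": "query", "schema": {"type": "boolean"}},
    ],
}
```

`operation_to_tool(GET_APPLICATION)` yields a tool named `getApplication` whose only required argument is `applicationId`; the optional `includeDocuments` flag carries through as a boolean property.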

What We Are Excited About:

1. Evolving Security Infrastructure

As MCP tools move toward modular infrastructure, traditional OAuth shows its limits. OAuth works well for fixed apps with pre-registered clients and static scopes, but agentic systems are different: they spin up short-lived agents that reason, act, and chain tools together. This creates three major security needs:

- Discovery: knowing what agents exist inside the company and where they came from;

- Observability: seeing what the agents are doing in real time and whether they’re following company guidelines and policies; and

- Permissions: giving them only the access they need for a specific task instead of broad, long-lived tokens.

Agent identity is like machine identity with agency. Unlike the holder of an API key or a service account, an agent can make decisions and adapt at runtime, which breaks OAuth’s assumptions. Early attempts to bolt OAuth onto MCP failed by mixing resource-server and authorization-server roles in the same server, blurring trust boundaries. A more robust approach separates duties: clients handle auth flows, servers enforce checks locally, and a dedicated layer issues and verifies tokens. We are already seeing early efforts to separate authorization servers from resource servers.

Developers do not want to rebuild routing, session handling, or tenant controls each time; they need a standard way to give agents the right access at the right time. Pre-authorizing a tool’s agents on the assumption that they behave predictably falls short when tasks spin up many short-lived agents with varying needs. Memory adds another wrinkle: scoped access can bleed across tasks, and revoking a token does not revoke what an agent has already internalized. In practice, this is not yet the main bottleneck, since complex agent use cases are still emerging. It remains an open question whether MCP needs native auth constructs or should rely entirely on external infrastructure. Middleware that standardizes scoped, task-level access and avoids broad standing privileges is one promising direction, building on ideas such as URL+PKI and delegated authority.
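To illustrate the separation of duties and task-level scoping described above, here is a minimal Python sketch in which an issuer mints a short-lived token bound to one agent, one task, and a narrow set of scopes, and a resource server verifies it locally. The token format, claim names, and functions are hypothetical; this is not OAuth and not part of the MCP specification. It uses a shared HMAC key only to stay within the standard library, whereas a real deployment would more likely use asymmetric signatures (for example PKI) so that resource servers can verify tokens without being able to mint them.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical key shared by the issuer and resource servers (demo only).
SIGNING_KEY = b"demo-key-do-not-use"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _sign(payload: str) -> str:
    return _b64(hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).digest())


def issue_task_token(agent_id: str, task_id: str, scopes: list[str],
                     ttl_s: int = 300, now: Optional[float] = None) -> str:
    """Issuer: mint a short-lived token bound to one agent, one task, narrow scopes."""
    issued_at = time.time() if now is None else now
    claims = {"sub": agent_id, "task": task_id, "scopes": sorted(scopes), "exp": issued_at + ttl_s}
    payload = _b64(json.dumps(claims, sort_keys=True).encode())
    return f"{payload}.{_sign(payload)}"


def verify_task_token(token: str, required_scope: str, task_id: str,
                      now: Optional[float] = None) -> bool:
    """Resource server: check signature, expiry, task binding, and scope locally."""
    parts = token.split(".")
    if len(parts) != 2 or not hmac.compare_digest(parts[1].encode(), _sign(parts[0]).encode()):
        return False
    padded = parts[0] + "=" * (-len(parts[0]) % 4)
    claims = json.loads(base64.urlsafe_b64decode(padded))
    current = time.time() if now is None else now
    return (current < claims["exp"]
            and claims["task"] == task_id
            and required_scope in claims["scopes"])
```

Because every token expires and names its task, a token leaked from one task is rejected by a server checking a different task or after the TTL. This narrows, but does not eliminate, the standing-privilege problem; it does nothing about what an agent has already memorized.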

Some promising emerging players in this space include Keycard, Natoma, and Descope for identity (authorization and permissions); Orchid Security, Astrix, and Reco for discovery and posture management; RunReveal and Zenity for investigation and monitoring; and our portfolio company Lakera, which tackles prompt injection and data leakage risks.

We will also be sharing a follow-up piece on agentic security soon, so stay tuned!

2. Composable Infrastructure for Multi-Agent Systems

Agentic workflows often struggle in larger organizations because building and deploying agents is harder than expected. With modular frameworks like MCP and A2A, vertical agents are evolving from narrow copilots into broader workflow engines. A coding agent, for example, could move from drafting code to fixing bugs, managing tickets, and logging updates, collapsing workflows that currently span disconnected tools. Yet most implementations overestimate what agents can do: context breaks, observability stays shallow, and testing focuses narrowly on prompts. MCP servers face similar issues, such as client-specific quirks and missing traces, which highlights the need for better tooling for both local and remote development.

Modularity and orchestration remain major bottlenecks. Master–sub-agent designs are still early and fragile, and feedback loops and runtime observability are incomplete. Closing the agentic loop, so that agents can act, observe, and adjust without dropping context, remains one of the hardest challenges. This creates clear opportunities in observability, runtime failure handling, and machine-readable documentation that supports context retention across workflows.
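A toy version of closing the loop is sketched below: each step acts on a shared context, failures are observed and written back into that context so a retry can adjust, and every attempt is recorded in a trace that links execution to workflow state. The step signature, `run_loop`, and the retry policy are hypothetical simplifications, not any specific framework’s API; real systems also need persistence, timeouts, and model-driven replanning.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical step signature: read the shared context, return new facts.
Step = Callable[[dict], dict]


@dataclass
class TraceEvent:
    step: str
    attempt: int
    ok: bool
    detail: str


@dataclass
class Run:
    context: dict = field(default_factory=dict)
    trace: list[TraceEvent] = field(default_factory=list)
    completed: bool = False


def run_loop(steps: list[tuple[str, Step]], context: dict, max_attempts: int = 2) -> Run:
    """Act, observe, adjust: run steps in order while carrying context and a trace."""
    run = Run(context=dict(context))
    for name, step in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                facts = step(run.context)  # act
            except Exception as exc:
                # Observe the failure and keep it in context so the retry can adjust.
                run.context.setdefault("errors", []).append(f"{name}: {exc}")
                run.trace.append(TraceEvent(name, attempt, False, str(exc)))
                continue
            run.context.update(facts)  # later steps see this step's outputs
            run.trace.append(TraceEvent(name, attempt, True, ", ".join(sorted(facts))))
            break
        else:
            return run  # all attempts failed: stop instead of running on missing context
    run.completed = True
    return run
```

For a coding agent, the steps might be "fetch ticket" and "apply fix": if the fix fails its tests once, the error is recorded in the context and the trace, and the second attempt can take that error into account instead of starting from scratch.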

Implementation and guidance add another layer of friction. Teams’ technical expertise often does not match the complexity of their workflows, which makes adoption difficult, particularly in mid-sized and enterprise organizations, and buy-versus-build decisions become more critical as applications evolve. Some brittleness also persists in tools from emerging companies: Langfuse, for instance, is perceived to do well at debugging individual steps, yet testing full multi-step agent flows remains challenging. Similarly, Braintrust offers flexible evals, but integrating them across multi-step agents is considered a pain point.
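One way to test a full flow rather than individual steps is to assert on the whole trajectory of tool calls. The sketch below is a hypothetical, framework-independent check (it does not use the Langfuse or Braintrust APIs): it verifies that the expected tools were called in order, allowing other calls in between, that no forbidden tool was used, and that the run stayed within a call budget.

```python
def called_in_order(expected: list[str], calls: list[str]) -> bool:
    """True if `expected` appears in `calls` in order, allowing other calls in between."""
    remaining = iter(calls)
    # `name in remaining` consumes the iterator up to the match, enforcing order.
    return all(name in remaining for name in expected)


def check_trajectory(calls: list[str], expected_order: list[str],
                     forbidden: frozenset[str] = frozenset(), max_calls: int = 20) -> list[str]:
    """Return failure messages for a whole run; an empty list means it passed."""
    failures = []
    if not called_in_order(expected_order, calls):
        failures.append(f"expected {expected_order} in order, got {calls}")
    misused = sorted(set(calls) & set(forbidden))
    if misused:
        failures.append(f"forbidden tools called: {misused}")
    if len(calls) > max_calls:
        failures.append(f"{len(calls)} calls exceeds budget of {max_calls}")
    return failures
```

Checks like these complement step-level debugging: a run can pass every individual step and still fail because it skipped fraud detection or looped past its budget.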

The Road Ahead: Early Power, Unfinished Edges

We believe that additional components and opportunities may emerge around MCP, though the monetization path remains unclear. Discovery and marketplaces could play a role but tend toward commoditization. Directories like Anthropic’s open registry and platforms such as Smithery already host thousands of servers, making simple listing or server creation a low-value play, although building server portals may be an angle worth exploring. Tool discovery is likely better served by search and recommendation systems embedded directly within agents and shaped by the tools a user already subscribes to. Beyond discovery, MCP opens possibilities for multi-agent orchestration, though this area remains largely untested at scale. These core needs will persist regardless of which frameworks gain traction, and multiple approaches can coexist.
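As a rough illustration of discovery shaped by a user’s existing subscriptions, the sketch below ranks registry entries by keyword overlap with the task and gives a small boost to servers the user already uses. The `ServerEntry` type, the scoring, and the `boost` value are hypothetical; a production system would more likely use embeddings, usage signals, and permission checks rather than token overlap.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerEntry:
    """Hypothetical registry record for one MCP server."""
    name: str
    description: str


def _terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_servers(task: str, registry: list[ServerEntry], subscribed: set[str],
                 boost: float = 1.0) -> list[str]:
    """Rank servers by keyword overlap with the task, boosting ones the user already has."""
    task_terms = _terms(task)
    scored = []
    for entry in registry:
        overlap = len(task_terms & _terms(f"{entry.name} {entry.description}"))
        if overlap == 0:
            continue  # irrelevant to this task, even if subscribed
        score = overlap + (boost if entry.name in subscribed else 0.0)
        scored.append((score, entry.name))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best first, ties broken by name
    return [name for _, name in scored]


REGISTRY = [
    ServerEntry("gmail", "Send and read email"),
    ServerEntry("hubspot", "Update CRM contacts and deals"),
    ServerEntry("outlook", "Send and read email and calendar events"),
]
```

For the task "send an email to the client", a user subscribed to `outlook` would see it ranked above `gmail`, while `hubspot` is filtered out as irrelevant.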

The real opportunity lies in protocol-agnostic infrastructure that can serve as a stable foundation across agent ecosystems. Beyond that, extensions such as coordinating master agents with task-specific sub-agents, linking observability to workflow state, and enabling continuous feedback represent the most important areas. Tools that reliably support context retention, runtime evaluation, debugging, and versioning are most likely to define lasting infrastructure and drive adoption.

If you’re building in this space or adjacent ones, or exploring where agentic infrastructure is headed, we’d love to connect!

Sources and Influences:

- Introducing the Model Context Protocol; Anthropic (LINK)

- Model Context Protocol (MCP) an overview; Phil Schmid (LINK)

- Model Context Protocol Deep Dive; Practical AI Podcast (LINK)

- Intro to APIs: History of APIs; Postman (LINK)

- Palo Alto Networks LIVEcommunity (LINK)

- Reddit and Hacker News Discussions